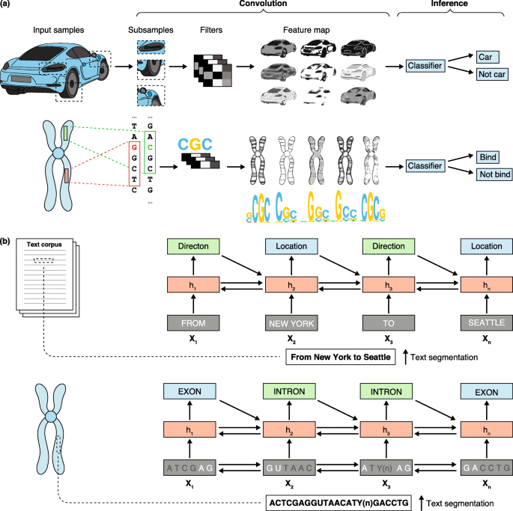

Computer vision is an interdisciplinary field that focuses on acquiring, processing, and analyzing images and/or video. Computer vision algorithms ingest high-dimensional image data and transform it (for example, by convolution) into numerical or symbolic representations of the concepts embedded in the image. This process is thought to mimic the way humans identify patterns and extract meaningful features from images. The main steps in computer vision are image acquisition, pre-processing, feature extraction, pattern detection or segmentation, and classification.

Deep-learning algorithms such as CNNs have been designed to perform computer vision tasks. In simplified terms, a typical CNN slides small matrices, known as kernels or filters, across the input image. Each filter encodes a pixel-intensity pattern that it ‘detects’ as it convolves across the image. Many filters, each encoding a different pixel-intensity pattern, convolve across the image to produce a two-dimensional activation map for each filter. Subsequent layers then combine the patterns detected by these filters to detect progressively more complex features (Fig. 1).
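To make the filter-and-activation-map idea concrete, here is a minimal NumPy sketch, assuming a single-channel image, stride 1, and no padding. The two 3 × 3 filters are hypothetical hand-written edge detectors; a trained CNN would learn its filter values from data. As in most deep-learning libraries, the "convolution" is computed as cross-correlation (the kernel is not flipped).

```python
import numpy as np

def convolve2d_valid(image, kernel):
    """Slide `kernel` across `image` (stride 1, no padding) and return its activation map.

    Computed as cross-correlation, i.e. the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    activation = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]           # window currently under the filter
            activation[i, j] = np.sum(patch * kernel)   # how strongly the pattern is present
    return activation

# Toy 6x6 grayscale image: dark left half (0.0), bright right half (1.0).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hypothetical filter that responds to dark-to-bright vertical edges.
vertical_edge = np.array([[-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0]])
# Hypothetical filter for horizontal edges (the transpose of the one above).
horizontal_edge = vertical_edge.T

filters = {"vertical_edge": vertical_edge, "horizontal_edge": horizontal_edge}
activation_maps = {name: convolve2d_valid(image, k) for name, k in filters.items()}

print(activation_maps["vertical_edge"])    # every row is [0. 3. 3. 0.]
print(activation_maps["horizontal_edge"])  # all zeros
```

The vertical-edge map responds only where the dark-to-bright boundary falls inside the 3 × 3 window, while the horizontal-edge map stays at zero for this image. Stacking such maps and convolving them with further filters is what lets deeper layers respond to more complex features.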

Fig. 1

Examples of different neural network architectures, their typical workflow, and applications in genomics. a Convolutional neural networks break the input image (top) or DNA sequence (bottom) into subsamples, apply filters or masks to the subsample data, and multiply each feature value by a set of weights. The product then reveals features or patterns (such as conserved motifs) that can be mapped back to the original input. These feature maps can be used to train a classifier (using a feedforward neural network or logistic regression) to predict a given label (for example, whether the conserved motif is a binding target). Masking certain base pairs while keeping others, across successive permutations, identifies the elements or motifs that are most important for classifying the sequence correctly. b Recurrent neural networks (RNNs) in natural language processing tasks receive a segmented text (top) or segmented DNA sequence (bottom) and identify connections between input units (x) through interconnected hidden states (h). Often the hidden states are encoded by unidirectional hidden recurrent nodes that read the input sequence and pass hidden state information in the forward direction only. In this example, we depict a bidirectional RNN that reads the input sequence and passes hidden state information in both the forward and backward directions. The context of each input unit is inferred from its hidden state, which is informed by the hidden states of neighboring input units and by their predicted context labels (for example, location versus direction, or intron versus exon).
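The DNA workflow in panel a can be sketched in the same way. The following NumPy example is an illustration under stated assumptions, not the pipeline of any particular published model: sequences are one-hot encoded, a single hypothetical filter built from the motif TATAAA is scanned along the sequence, the resulting feature map is max-pooled, and a logistic-regression step with made-up (untrained) weight and bias turns the pooled score into a probability. Masking one base at a time with N then shows which positions the prediction depends on.

```python
import numpy as np

BASES = "ACGT"

def one_hot(seq):
    """Encode DNA as a (length, 4) matrix with columns A, C, G, T; 'N' stays all-zero."""
    x = np.zeros((len(seq), 4))
    for pos, base in enumerate(seq):
        if base in BASES:
            x[pos, BASES.index(base)] = 1.0
    return x

def scan(x, w):
    """Slide a (k, 4) filter along a one-hot sequence: one match score per window."""
    k = w.shape[0]
    return np.array([np.sum(x[i:i + k] * w) for i in range(len(x) - k + 1)])

def predict(seq, w, weight=2.0, bias=-9.0):
    """Max-pool the feature map, then apply logistic regression.

    `weight` and `bias` are illustrative values, not trained parameters.
    """
    score = scan(one_hot(seq), w).max()
    return 1.0 / (1.0 + np.exp(-(weight * score + bias)))

def masking_importance(seq, w):
    """Mask one base at a time with 'N' and record how much the prediction drops."""
    base_p = predict(seq, w)
    return np.array([base_p - predict(seq[:i] + "N" + seq[i + 1:], w)
                     for i in range(len(seq))])

motif_w = one_hot("TATAAA")   # hypothetical filter: +1 for each base matching the motif
seq = "GCGCTATAAAGCGC"

print(round(predict(seq, motif_w), 3))                   # 0.953
print(np.nonzero(masking_importance(seq, motif_w))[0])   # [4 5 6 7 8 9]
```

Only masking a base inside the embedded motif lowers the prediction, which is the masking-based identification of important elements described in panel a. In a real model the filter values, weight, and bias would be learned, and many filters would be scanned in parallel.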
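The bidirectional RNN in panel b can likewise be sketched in NumPy. This assumes simple Elman-style recurrent cells with a tanh activation, randomly initialized (untrained) weights, one-hot DNA input, and two hypothetical per-position labels (exon and intron); practical models usually use LSTM or GRU cells with learned weights. The point is structural: each position's state concatenates a forward pass and a backward pass, so it carries context from both sides.

```python
import numpy as np

rng = np.random.default_rng(0)
BASES = "ACGT"
HIDDEN = 8

def init_params(input_dim, hidden_dim):
    """Random, untrained weights (W_x, W_h, b) for one recurrent direction."""
    return (rng.normal(scale=0.5, size=(hidden_dim, input_dim)),
            rng.normal(scale=0.5, size=(hidden_dim, hidden_dim)),
            np.zeros(hidden_dim))

def rnn_pass(xs, W_x, W_h, b):
    """Elman RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b), reading xs in list order."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x in xs:
        h = np.tanh(W_x @ x + W_h @ h + b)
        states.append(h)
    return states

def bidirectional_states(seq, fwd_params, bwd_params):
    """Concatenate forward and backward hidden states for each base in seq."""
    xs = [np.eye(4)[BASES.index(base)] for base in seq]   # one-hot input units x_t
    forward = rnn_pass(xs, *fwd_params)
    backward = rnn_pass(xs[::-1], *bwd_params)[::-1]      # re-align to input order
    return [np.concatenate([f, b]) for f, b in zip(forward, backward)]

fwd_params = init_params(4, HIDDEN)
bwd_params = init_params(4, HIDDEN)
W_out = rng.normal(scale=0.5, size=(2, 2 * HIDDEN))   # hypothetical labels: exon, intron

def label_probs(seq):
    """Per-position softmax over the two hypothetical context labels."""
    states = bidirectional_states(seq, fwd_params, bwd_params)
    logits = np.stack([W_out @ h for h in states])
    exp = np.exp(logits - logits.max(axis=1, keepdims=True))
    return exp / exp.sum(axis=1, keepdims=True)

states_a = bidirectional_states("ATGGTAAGT", fwd_params, bwd_params)
states_b = bidirectional_states("ATGGTAAGC", fwd_params, bwd_params)  # last base changed

# The forward half of position 0 cannot see the change; the backward half can.
print(np.allclose(states_a[0][:HIDDEN], states_b[0][:HIDDEN]))   # True
print(np.abs(states_a[0][HIDDEN:] - states_b[0][HIDDEN:]).max())
print(label_probs("ATGGTAAGT").shape)                            # (9, 2)
```

Because the weights are random, the label probabilities carry no biological meaning until the network is trained; the example only shows how forward and backward hidden states are assembled so that each position's context reflects its neighbors on both sides.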